Back to blog!

From time to time I get some cool requirements from customers. That makes me think and do what I like to do: analyze, design, build and make everyone happy :-)

In this case, I got a challenging requirement for an automated integration between several source systems and HFM. Of course, using our favorite fishing tool, FDMEE. Yes, I know, everybody is now thinking about Cloud and more Cloud. However, FDMEE is still a very strong EPM integration tool supporting Hybrid and survives in 11.2.

I won't go into much detail so I can keep it simple.

The customer already had all automation built using open batch files and different direct data extracts. However, there was no email notification solution implemented, so it was hard for them to get results without accessing FDMEE.

We started to gather the requirements and we came up with the following list:

- Bypass missing mappings and send summary by email

- Bypass invalid HFM intersections and send the Intersection Check report by email (html format)

- Identify the CANNOT WRITE intersections (the blue ones) and include them in the email body

- Show Process Management information about the HFM Process Units where data cannot be written. Basically, show the Review Level so the users are aware of what is happening

- Build a data-tracking solution for HFM Phased Submission

The goal is to cover all requirements in future blog posts, but today I will start with the key one: data tracking. Personally, I think this would be great functionality for Cloud Data Management as well. It fills a gap in the data audit in FDMEE (you can see how cell data changes in HFM).

Requirement - Data Tracking for HFM Phased Submission

This is the requirement word by word:

To maintain control over the phased close process, a data tracking service is required to track data changes impacting Gross Margin and EBITDA from WD2 and WD4 respectively.

We need the following key controls:

- The phased submission process should allow for a soft Gross Margin and EBITDA close whilst tracking changes to the underlying data impacting Gross Margin and EBITDA from WD2 and WD4 respectively

- The data load monitoring service must be relevant i.e. we DO NOT want to track all financial statement data changes and report them to users

- Appropriate stakeholders must be notified of the data changes once the deadlines have been passed

- This process must not disrupt the source trial balance data load to HFM i.e. the trial balance loaded must be complete and accurate

- Sufficient provision should be built into the design to switch over to a hard WD2/WD4 close if desired

Let's give some shape to these words as we want to keep everything simple. HFM will be using phased submissions to manage the data flow with review levels acting as a trigger event for data tracking.

- Review Level 1 (RL1): Both the automated and manual FDMEE load processes are running and exporting data to HFM.

- Review Level 2 (RL2) Soft Close: the promotion to RL2 acts as a trigger to activate the data monitoring script that will track data changes when data is exported to HFM.

- Review Level 3 (RL3) Hard Close: at RL3 the FDMEE Global user system account no longer has access to load data to HFM.
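The three review levels above boil down to a small decision table. The following is only a sketch in plain Python, not the actual FDMEE script: the action names and the `action_for_review_level` function are hypothetical, and how the review level is read from HFM is left out.

```python
# Hypothetical action names used only for this illustration.
LOAD = "load"                      # RL1: load data as usual
LOAD_AND_TRACK = "load_and_track"  # RL2 (soft close): load and track the changes
BLOCKED = "blocked"                # RL3 (hard close): no loads allowed

# Decision table derived from the three review levels described above.
ACTION_BY_REVIEW_LEVEL = {
    1: LOAD,
    2: LOAD_AND_TRACK,
    3: BLOCKED,
}


def action_for_review_level(review_level):
    """Return what a data export should do for a process unit at this level."""
    try:
        return ACTION_BY_REVIEW_LEVEL[review_level]
    except KeyError:
        raise ValueError("Unsupported review level: %r" % (review_level,))


if __name__ == "__main__":
    for level in (1, 2, 3):
        print("RL%d -> %s" % (level, action_for_review_level(level)))
```

Keeping the rule in a single table also makes the switch to a hard WD2/WD4 close a one-line change: RL2 would simply map to the blocked action.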

Phased Submissions at a glance

The term phased submissions in HFM is synonymous with managing data through stages of the close cycle. In a typical organization this would involve:

- Phase 1 – Promote inter-company data

- Phase 2 – Promote Trial Balance data

- Phase 3 – Promote Balance Sheet movement data/cash-flow

- Phase 4 – Promote disclosure notes

Our Customer

The following tables show the different phases and details:

| WD2 | WD4 |
|---|---|
| Phase 1 | Phase 2 |
| Promote Gross Margin data | Promote EBITDA data |
| Soft close - RL2 | Soft close - RL2 |
| Enable data monitoring on accounts that impact Gross Margin | Enable data monitoring on accounts that impact EBITDA |
| Automated notifications informing users of changes to Gross Margin data | Automated notifications informing users of changes to EBITDA data |

| WD6 | |
|---|---|
| Phase 3 | Phase 4 |
| Promote Trial Balance data | Promote USGAAP/Disclosure Notes |
| Hard close - RL3 | Hard close - RL3 |
| Lock trial balance data | Lock USGAAP adjustments and changes to disclosure notes |
| Stop all email notifications | Journals/Offline (excel) submissions |

Using Custom HFM dimension to Link Accounts and Phases

A conscious decision was made to avoid tagging submission groups on the account dimension. Invariably, accounts end up being incorrectly tagged (or Group Finance change their mind!), which results in the need for an application metadata update to correct the submission group assignment (used to profile phased submissions).

Instead, a spare custom dimension was leveraged, in this case Custom6 (Custom5 is used to evaluate constant currency), with the intention of using FDMEE and SQL mapping scripts to load data to each of the Custom6 dimension members respectively. This means that we can now adjust the FDMEE mapping for phased submission purposes without the need to apply HFM metadata updates.

Please note that this approach will only work dynamically where the chart of accounts adheres to a rational numbering sequence, e.g. Revenue accounts are 1* series, Cost of Sales accounts are 2* series and OPEX accounts are 3* series, etc. Other approaches are also valid.
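To make the idea concrete, here is a minimal sketch of prefix-based tagging. It is written in plain Python for illustration rather than as the SQL mapping scripts mentioned above. The Custom6 member names (`C6_GrossMargin`, `C6_EBITDA`, `C6_None`) are hypothetical, and so is the assumption that the 1* and 2* series feed Gross Margin while the 3* series feeds EBITDA.

```python
# Hypothetical prefix rules: (account prefix, Custom6 member).
# Assumption: Revenue (1*) and Cost of Sales (2*) impact Gross Margin,
# OPEX (3*) impacts EBITDA. Adjust to the real chart of accounts.
PREFIX_TO_CUSTOM6 = [
    ("1", "C6_GrossMargin"),  # Revenue accounts
    ("2", "C6_GrossMargin"),  # Cost of Sales accounts
    ("3", "C6_EBITDA"),       # OPEX accounts
]

# Hypothetical member for accounts that are not part of any tracked phase.
DEFAULT_CUSTOM6 = "C6_None"


def custom6_for_account(account):
    """Return the Custom6 member for a source account based on its prefix."""
    account = str(account).strip()
    for prefix, member in PREFIX_TO_CUSTOM6:
        if account.startswith(prefix):
            return member
    return DEFAULT_CUSTOM6


if __name__ == "__main__":
    for acc in ("100100", "210500", "310000", "900000"):
        print("%s -> %s" % (acc, custom6_for_account(acc)))
```

Because the rules live in the mapping layer, moving an account series to another phase means editing one rule instead of updating HFM metadata.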

Translating the Requirements for the Integration Guy

Although the solution seems complex, design is the key. Basically, we need FDMEE to:

- Get the HFM Process Management details (Review Level for the different phases)

- During Soft Close, compare the new data set against the last data set loaded. That will monitor all data load changes

- Auto-map missing mappings so the workflow can be completed

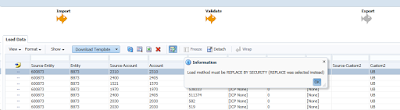

- Auto-map for invalid HFM intersections and identify the CANNOT WRITE intersections (Soft Close)

- Keep audit in FDMEE so missing mappings and invalid intersections can be easily filtered in the Data Load Workbench

- Send email notification with detailed results
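The comparison step in the list above (new data set against the last data set loaded) can be sketched as a simple keyed diff. This is an illustration in plain Python, not the FDMEE implementation: the `diff_loads` function, the shape of the intersection key and the rule that a missing cell counts as zero are all assumptions.

```python
def diff_loads(previous, current, tolerance=0.0):
    """Compare two loads keyed by intersection and return the changed cells.

    Both arguments are dicts mapping an intersection, here a hypothetical
    (entity, account, custom6) tuple, to the amount loaded. A cell missing
    from one load is treated as zero (an assumption of this sketch).
    Returns a sorted list of (intersection, old_amount, new_amount).
    """
    changes = []
    for key in sorted(set(previous) | set(current)):
        old = previous.get(key, 0.0)
        new = current.get(key, 0.0)
        if abs(new - old) > tolerance:
            changes.append((key, old, new))
    return changes


if __name__ == "__main__":
    last_load = {
        ("E100", "100100", "C6_GrossMargin"): 1000.0,
        ("E100", "200100", "C6_GrossMargin"): 250.0,
    }
    new_load = {
        ("E100", "100100", "C6_GrossMargin"): 1200.0,
        ("E100", "200100", "C6_GrossMargin"): 250.0,
        ("E100", "300100", "C6_EBITDA"): 75.0,
    }
    for key, old, new in diff_loads(last_load, new_load):
        print("%s: %s -> %s" % ("/".join(key), old, new))
```

Only the changed cells come back, which keeps the notification relevant: unchanged trial balance lines never reach the email.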

In the next chapter we will go through the solution design in FDMEE, and then we will continue with some examples where you can see how it works and the outcome of this data monitoring solution.

Merry Xmas!
