Last day of the year, last post of the year.

This is the 5th part of my Migration Utility series. Is it the last one? I don't think so but it depends how much I use the utility :-)

Today I will reveal why no artifacts were actually migrated. Before we go the the matter I would like to summarize what we have been discussing so far:

- Part 1: Introduction to the utility and general considerations

- Part 2: Installation and ODI configuration

- Part 3: Migrating an HFM application. A real case study

- Part 4: Going through initial ODI steps. Creation of the target HFM application in FDMEE

Update [4/1/2016]

Oracle has released new version of the migration utility. All details are in patch Patch 22659123

One of the first stoppers we found was that even if the target HFM application was created and configured in FDMEE, no artifacts such as import formats or locations were created. Why? it took me a while to find the root cause and then confirm with Oracle that we were facing a bug. This is supposed to be fixed in the next patch for the migration utility. In the meantime you can apply the workaround we will use.

Last step we detailed in part 4 was the configuration of target application dimensions and options. Before we explore the issue with artifacts I would like to briefly go through 3 configuration steps:

Create Source System Adapter

This step creates a source system for the Open Interface Adapter. This will be used for historical data migration in case we proceed.

We can see the new source system in FDMEE:

Extract Categories and Periods

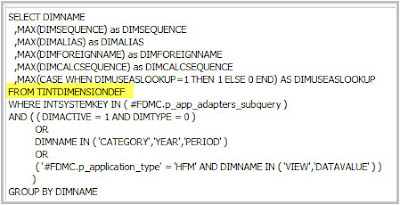

Categories are created based on what is stored in FDM tables TPOVCATEGORY and TPOVCATEGORYADAPTOR.

It's important to remark that application category mappings will be only migrated if target category in legacy FDM is set to a target value different than [EMPTY], so actually if the mapping is correctly defined:

Periods work in a similar way although global and application periods are migrated in two steps rather than one. They are extracted from tables TPOVPERIOD and TPOVPERIODADAPTOR.

So after our categories and periods have been migrated, let's have a look in FDMEE:

Categories look fine: 3 out of 3!

In any case, few things you should know:- If the category exists, it will not be created. In my case Actual already existed so it has not been created. Budget and Forecast are new categories

- Category keys (CATKEY) will not probably match. New categories are created using the CATKEY sequence of FDMEE.

Regarding Periods, there were lots of global periods configured in FDM with target month and year as [Empty]. These periods are migrated as global periods although only periods with valid mappings at adapter level are migrated to FDMEE as periods at target application level.

In the image above we can see all global periods added (only new are added). You may want to setup your periods correctly before migrating. Otherwise you will get only few of them as target application period mappings:

And now, artifacts not migrated

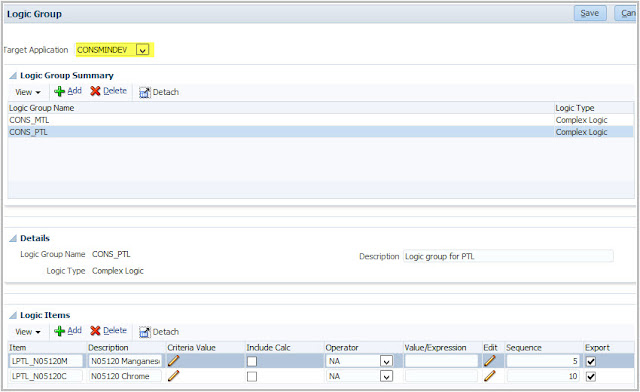

If we have a look to the ODI scenario steps, next artifact to be migrated are the logic groups.

We do have two logic groups in legacy FDM so we should have them also in FDMEE:

But no, we don't have them in FDMEE :-)

I already said in the first post of this series that this is a technical utility so any research will have to be done at technical level either ODI or Database.

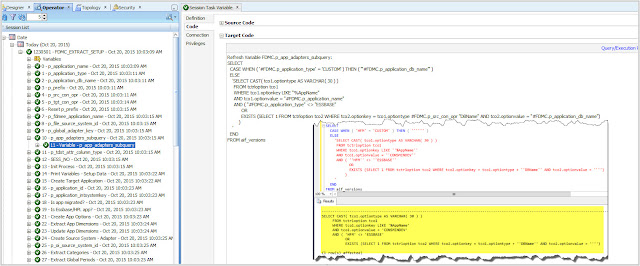

Let's start then with the SQL query used by ODI to extract the logic groups:

Before running the query it's good to have a look and see which filters are used. There is one which took my attention: WHERE parttype = 2

So let's see in table TPOVPARTITION which locations have partition type 2, well actually, what is that partition type property?

With a simple SQL query I can see that there are 4 locations having logic groups assigned and they have parttype = 1...

Ok, root cause found: logic groups are not extracted because the filter executed by ODI will exclude locations with partition type 1. But again, what is partition type? After some researching in legacy FDM we got the answer.

Do you remember about Bulk Insert or SQL Insert options when defining locations in FDM? So there you have your answer. Column parttype stores the location load type. A load type "SQL Insert" is stored as 1 and a load type "Bulk Insert" is stored as 2. As FDMEE mostly uses Bulk inserts for better performance, this was missed in the migration utility...

Trying it again

So now that we think we know the issue, let's try it again!

First we need to update table TPOVPARTITION in the source database (or we change types one by one in the FDM application):

update [CMFDMDEV].[dbo].[tPOVPartition]

set parttype = 2

Then we need to delete the target application in FDMEE in order to avoid issues:

This will launch a purge process in FDMEE which will delete the target application in FDMEE and all related objects already migrated (periods, categories, etc.)

Time to execute the ODI scenario again:

It's 31st of December, we all should be enjoying our families and partying so let's show it:

Wow, groups created!

Any other artifacts? Import Format failed!!! ODI error messages tells me something about size...

ODI operator shows me that import formats were extracted to the staging table (C$...) but it failed when loading into FDMEE table TBHVIMPGROUP.

In deed, we can see them in the staging table:

I suspect that column IMPGROUPKEY is the guilty. If I check size in the staging I can see it's different than the size in target table but same as source table tBhvImpGroup in FDM:

So which is the issue then? it's obvious that the prefix is making the staging column bigger and therefore raising the error, especially import format AUSTRALIA_PHASED which length is 21 when including the prefix:

Let's test then with prefix "C_":

And now?

Here it is! All import formats have been migrated. However you will notice that FDM import scripts are not valid anymore. They were implemented in VB Script (uss extension). We now have Jython so time to re-write scripts :-) Here you have an advice...do you really need them? analyze your scripts and see if you can re-design your FDMEE in order to avoid them. They might be good candidates to be replaced by new out-of-the-box functionality.

Now that my objects have been migrated it's time to review them, perform some manual actions where needed and why not, migrating historical data :-)

2016 is here and new challenge are coming.

I will be presenting and enjoying KSCOPE16 in Chicago and I hope to see you all there!

Have a happy year!