Installing the Patch

The first thing to do is read the Readme.html file :-)

This patch has some prerequisites that I had to go through. Judging by the installation steps, it looks simpler than previous PSUs.

I'm not going to cover how to apply the patch; just make sure you get the "OPatch succeeded" message:

I don't really understand why there is an additional step to copy the batch template file for the new feature that executes scripts from the command line. I was expecting this to be done automatically when applying the patch:

My environment is a single server, so there is no need to copy any jar files. As per Oracle's readme:

If your deployment is a distributed environment where Hyperion Financial Management and Planning are on different servers than FDMEE, copy the following jar files:

Copy files from the Hyperion Financial Management server on EPM_ORACLE_HOME\common\hfm\11.1.2.0\lib to the same directory on the FDMEE server.

Copy files from the Planning server on the EPM_ORACLE_HOME\common\planning\11.1.2.0\lib to same directory on the FDMEE server.
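For a distributed environment, the copy step from the readme could be scripted. The following is only a minimal sketch, not something delivered with the patch: the helper name `copy_jars` is my own, and the paths in the usage comment are hypothetical and depend on how the servers are reachable from each other.

```python
import shutil
from pathlib import Path


def copy_jars(source_lib, target_lib):
    """Copy every .jar file from source_lib into target_lib.

    Returns the sorted list of file names that were copied.
    """
    source = Path(source_lib)
    target = Path(target_lib)
    # Create the target directory if it does not exist yet.
    target.mkdir(parents=True, exist_ok=True)
    copied = []
    for jar in sorted(source.glob("*.jar")):
        # copy2 also preserves the file timestamps.
        shutil.copy2(jar, target / jar.name)
        copied.append(jar.name)
    return copied


# Hypothetical usage (share name and local path are assumptions):
# copy_jars(r"\\hfmserver\epm\common\hfm\11.1.2.0\lib",
#           r"D:\Oracle\Middleware\EPMSystem11R1\common\hfm\11.1.2.0\lib")
```

The same call would be repeated for the Planning lib directory.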

You can check versions in Workspace after patching:

In my case, I had to patch HFM and Planning as well.

Action Scripts for HFM

Action scripts are used to integrate with HFM, and they are located on the FDMEE server:

The three scripts above have been modified in PSU210:

Why is this important?

Sometimes you have to adjust these action script files. This is a valid option, but bear in mind that your changes are lost when the patch overwrites the files. Therefore, if you have changed any of the three scripts, you will have to re-apply your changes after patching.
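One way to find out which scripts the patch actually touched is to fingerprint them before and after patching. This is a minimal sketch under my own assumptions: the helper names are hypothetical, and the scripts folder must be passed in, since its location depends on your installation.

```python
import hashlib
from pathlib import Path

# The three HFM action scripts discussed in this post.
ACTION_SCRIPTS = ("HFM_LOAD.py", "HFM_EXPORT.py", "HFM_CONSOLIDATE.py")


def fingerprint(scripts_dir, names=ACTION_SCRIPTS):
    """Return {file name: SHA-256 hex digest} for the scripts found in scripts_dir."""
    result = {}
    for name in names:
        path = Path(scripts_dir) / name
        if path.is_file():
            result[name] = hashlib.sha256(path.read_bytes()).hexdigest()
    return result


def changed_scripts(before, after):
    """Return the sorted names whose digest differs (or is missing) after patching."""
    return sorted(name for name in before if before[name] != after.get(name))
```

Run `fingerprint` once before applying the patch and once after, then pass both results to `changed_scripts`. Any script listed is one where your customizations need to be re-applied on top of the new version.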

HFM_LOAD.py

Just minor changes to fix a bug related to loading journal descriptions.

HFM_EXPORT.py

Just a minor change to fix a bug related to the order of records exported in the DAT file.

HFM_CONSOLIDATE.py

This change was made to correctly cast parameter options to boolean. In the previous version, Calculate and Consolidate were not executed correctly.
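To illustrate why a wrong boolean cast can cause this kind of problem, here is a minimal sketch. It is not Oracle's code: I am assuming the option values arrive as strings, and `to_bool` is a hypothetical helper.

```python
def to_bool(value):
    """Interpret common textual flags as a boolean."""
    if isinstance(value, bool):
        return value
    return str(value).strip().lower() in ("true", "yes", "y", "1")


# Any non-empty string is truthy in Python, so a naive cast gets "false" wrong.
naive = bool("false")      # True
correct = to_bool("false")  # False
```

With a naive cast, an option set to "false" would still be treated as enabled, which is the sort of behavior a proper cast avoids.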

Import Formats

New import types...new import format options:

Please bear in mind that this is currently supported for the File type only. Other sources, such as the Universal Data Adapter, don't support it yet.

They have also added a search icon for Source and Target, so we don't have to select both Source and Target types. This is a good enhancement, but it has one drawback. They did not include a column showing the application type:

You can also see new options for new features such as skipping rows in delimited files, using periods/years in rows, and tilde as a file delimiter:

TDATASEG and TDATASEG_T Tables

We can now load text data to Planning. FDMEE used to have only the AMOUNT and AMOUNTX columns for loading numeric data, so new columns have been added to support Text, Date, and Smart Lists.

SAP HANA

SAP HANA integration is now supported in the Universal Data Adapter:

Important: there is no documentation yet, not even in the patch readme, so I performed the actions below at my own risk.

SAP JDBC Driver

I haven't found any SAP HANA JDBC driver (it is supposed to be ngdbc.jar). That is to be expected, as I don't think Oracle is allowed to deliver SAP drivers. If you are an SAP customer or have access to the SAP market, you will be able to download it.

As part of the configuration, some ODI files need to be imported into both Master and Work repositories.

SAP HANA (Master Repository)

We first need to import the new technology for SAP HANA (I used INSERT_UPDATE mode, but you can use INSERT as well, as this is a new object):

Having a look at the import report, I can deduce this is a copy of an Oracle technology :-)

After the technology has been imported, we need to import the Data Server and Physical Schema for HANA:

You should get a report like this:

If everything went OK, you will see new objects created in Topology:

SAP HANA (Work Repository)

The next steps are to import the UDA Models Folder and the UDA Project, which in their new versions include the SAP HANA models and project respectively.

Important: these two imports should be performed in INSERT mode. Otherwise, the existing UDA configuration will be deleted. I will assume that there were no changes for other UDA Data Sources in PSU210, so I don't need to update any existing model or project.

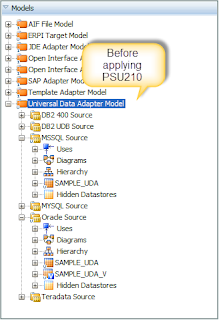

For example, this is my Model structure before applying the patch:

As you can see, I have UDA already configured for Oracle and MSSQL.

If I import the new model folder in INSERT_UPDATE mode:

Then the existing objects will be deleted:

The import must be done in INSERT mode if you don't want this to happen:

That way, only the new SAP_HANA model/sub-model will be imported:

And both the new and the existing models will be available:

The same applies to the UDA Project, as we only want to import the new project for HANA:

The new HANA project is now available in the Project list:

Some bugs fixed

It's not all new features in a PSU. We need to fix some bugs as well :-)

Duplicate Mappings

It seems that we now get errors when adding duplicate mappings:

Even if we try to import mappings from files:

We get an error pop-up window:

Update 9/1/2017: it seems that some people are still able to create duplicate mappings after applying the patch. Indeed, I could now create duplicate maps myself. The reason is that the duplicate validation does not work against a mapping that is already saved in the system. So if you add two new maps without saving the first one before adding the second, the validation works. If you add a new map, save it, and then add the duplicate one, both are accepted...

In other words, it seems that it is not validating against existing mappings, only against new ones. Probably a bug. Let's wait for Oracle's feedback.
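The behavior I observed can be modeled with a small sketch. This is only my own illustration, not FDMEE code: `find_duplicates` is a hypothetical helper, and I am assuming a mapping is identified by its dimension and source value.

```python
def find_duplicates(new_maps, saved_maps=()):
    """Return the new mappings that duplicate an earlier new or saved mapping.

    Each mapping is a (dimension, source value) tuple.
    """
    seen = set(saved_maps)
    duplicates = []
    for mapping in new_maps:
        if mapping in seen:
            duplicates.append(mapping)
        seen.add(mapping)
    return duplicates


saved = [("Account", "1000")]
new = [("Account", "1000")]

# Checking only the new rows (what the patch seems to do) misses the duplicate.
only_new_rows = find_duplicates(new)
# Checking against the saved rows as well catches it.
with_saved_rows = find_duplicates(new, saved)
```

In this model, two identical unsaved rows are still caught even without the saved mappings, which matches what I saw in the UI.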

Update 10/1/2017: a bug has been filed for this issue. Hopefully a PSE will be released soon.

Update 13/1/2017: it seems that the bug is related to MSSQL only. Oracle databases should work fine.

I will leave it here for today. I hope you now have a good idea of what you get with the new patch.

Merry Xmas!