Life keeps me busy and it's getting hard to find some time to post which I feel sorry about.

Some time ago, I delivered an ODTUG Webinar about extending source filter functionality for Universal Data Adapter (UDA). As a "new" feature in FDMEE, functionality is a bit limited if you compare with what you can do when having a script to extract data from your source Table/View. For this reason, customization can be very helpful.

I wanted to have some more use cases before I shared with you a good one. As you can imagine, I finally got it :-)

The main goal if this post is to show how customizing source filters can help you in many different ways. Not sure about that? I hope you get a clear idea after reading it.

As this post is about UDA, I would recommend you to read my previous posts in case you haven't:

- Universal Data Adapter (UDA), direct DB integration - Part 1

- Universal Data Adapter (UDA), direct DB integration - Part 2

- Universal Data Adapter (UDA), direct DB integration - Part 3

There are many ways of extracting data depending on very different requirements but we all agree that extracting Trial Balance Year-to-Date (YTD) balances is the most common one.

What about if your reporting and analysis process need more detail that TB figures have? we probably need to jump into Transactional data concept. I know, that means bigger volumes of data, but if you need it, you have to extract it, and it's our job to build a robust, maintainable, flexible, and well performing solution for that.

I don't want to dive deeper into functional details so I will show you the high level technical solution:

As you can see above, we have to extract data from two different Oracle regular views:

- View with GL data

- View with SALES data

These views have been created on top of IQMS ERP database. If you google it, you already know we are talking about an ERP solution for Manufacturing. Therefore, extracting huge number of transactional records. Time to put FDMEE on the racing track!

UDA is the source adapter used to perform this data extract. It provides a seamless integration between source database tables and views. And it's FDMEE's RDBMS independent! IT team was impressed about being able to integrate the source IQMS database (Oracle 11g) with FDMEE database (MSSQL 2012) in such an easy way. And the good thing? UDA uses Oracle Data Integrator (ODI) which can extract and execute filters on the source DB, than means, take advantage of the source DB engine and transfer lower volume of records between the two systems.

We will be filtering data based on the Location selected by the user in the FDMEE POV (Column COMPANY). Besides, we want to filter data by transaction date (Column JOURNAL_DATE), and here is where a decision has to be made.

Extracting YTD transactions

Extracting YTD transactions

I guess you are all familiar with YTD concept. I will not make difference between Balance Sheet, Income Statement or Sales. I will keep it simple and show how the functional requirement "Extract YTD figures" turns out into the technical requirement.

Technically, extracting YTD transaction figures means filtering data between a range of transaction dates:

How can we implement this filter using UDA? How can we map one target period with multiple source periods? basically, in two ways (maybe more).

- Source Period Mappings (Standard)

- Source Filters (Needs some customization)

Which one is better? what do we mean with "better"? which one would you choose?

Source Period Mappings

Since ERP integrations and Source Adapters were introduced in the product, it makes sense that, in the same way we create Global/Application period mappings, we can create source period mappings.

Basically we map each Point-Of-View Period with source period(s) we want to extract data from. We can even create multiple calendars for each source.

For the UDA, we have to specify GL Year, GL Period and GL Period Number.

How does FDMEE know which are the columns in our source View/Table used to filter periods? Easy, when we setup the UDA, we will tell FDMEE which columns are classified as Year, Period and Period Number. This is only needed if we are going to use source period mapping table to filter data. Otherwise, you don't have to classify the columns and, of course, you don't have to define the period mappings.

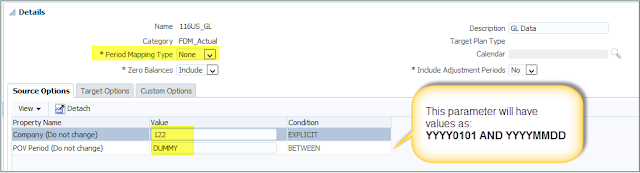

Once this configuration is done, when we configure the Data Load Rules (DLRs), we can select if we want to use the source period mapping to filter data (Period Mapping = Explicit) and which calendar, or if we want to extract all data from source (Period Mapping Type= None):

So now that we know the basics, how would we define the Source Period Mapping table to extract YTD balances from the Oracle Views?

We would need to define the mapping table as:

- Source Period Mappings for POV Period Jan-2016

- 01/01/2016, 01/02/2016,...,01/31/2016

- Source Period Mappings for POV Period Feb-2016

- 01/01/2016, 01/02/2016,...,01/31/2016

- 02/01/2016, 02/02/2016,...,02/29/2016

- ...

Let's be a bit more creative...Do we really need to define source period mappings at date level? why don't we define them at month level?

For example, we could define new column in the View called PERIOD_NAME. Then we can easily define period mappings as:

For example, we could define new column in the View called PERIOD_NAME. Then we can easily define period mappings as:

- Source Period Mappings for POV Period Jan-2016

- Jan-2016 (value of PERIOD_NAME column)

- Source Period Mappings for POV Period Feb-2016

- Jan-2016 (value of PERIOD_NAME column)

- Feb-2016 (value of PERIOD_NAME column)

- ...

Then, what is really important here?

Let's see how FDMEE would import data if we use the source period mapping table (Ex: Dec-2016)

- Import data for Jan-2016

- Import data for Feb-2016

- Import data for Mar-2016

- ...

- Import data for Dec-2016

- Transform all data

- Validate all data

- Export all data

- Load all data

Each import step above will execute a SQL query against the source View, that means, extracting, filtering, and loading into TDATASEG_T.

Could we simplify it to just one import step which performs the extract for YTD figures in one shot?

Obviously, answer is YES :-)

How? keep reading...

Source Filter for UDA

If we think about a SQL query to get data filtered from the source view, we would have something like this:

We can identify two columns which will be filtered: COMPANY and JOURNAL_DATE.

If we think about a SQL query to get data filtered from the source view, we would have something like this:

We can identify two columns which will be filtered: COMPANY and JOURNAL_DATE.

First thing we did was to define an additional column in the View: FILTER_DATE

Initially, the reason for creating this column was to capture some special cases where Journals were posted in Date A but they had to be imported in Period B which did not match period where Journal was posted. Then, we reused that column in order to define our filter:

Once the column was created, we could create the source filters for our interface.

Source filters are defined in the source adapter as Parameters:

Values for these parameters are set in the Data Load Rule page:

It's very important that for using this approach, option Period Mapping Type is set to None. This option basically means that we will extract all data from the source but filtered by our source parameters.

In this implementation, end-users don't have access to Data Load Rule page so they can't change the values. Obviously, p_company value is static for each DLR, what about p_date? its value needs to be dynamic as it depends on the POV period user selects in Data Load Workbench.

How can we make this parameter value dynamic? Can FDMEE do that?

Let's see first how it works internally.

Why YYYYMMDD? Because it was quite close to the format PERIODKEY was stored (YYYY-MM-DD)

Start Date: as we are extracting YTD, the start date of the range will be always YYYY0101 (1st of Jan) where YYYY is the year of the POV period being processed.

End Date: this will be our POV Period Key which has been configured in the Global Period Mapping Table as the last day of each month.

Therefore, our parameter value should look like: BETWEEN 'YYYY0101' AND 'YYYYMMDD'

It's clear then, that PERIODKEY will be our driver to get the two values we need. This value is stored in the context object fdmContext:

Updating the table with parameter values is not difficult, you just need some SQL notions:

And the good thing? this is totally transparent for the end-user as the parameter value will be updated on the fly based on the period he/she selects in the Point-Of-View bar.

Values for these parameters are set in the Data Load Rule page:

It's very important that for using this approach, option Period Mapping Type is set to None. This option basically means that we will extract all data from the source but filtered by our source parameters.

In this implementation, end-users don't have access to Data Load Rule page so they can't change the values. Obviously, p_company value is static for each DLR, what about p_date? its value needs to be dynamic as it depends on the POV period user selects in Data Load Workbench.

How can we make this parameter value dynamic? Can FDMEE do that?

Let's see first how it works internally.

- DLR execution is launched

- FDMEE inserts all parameter values (not only source adapter but target options, etc.) into table AIF_BAL_RULE_LOAD_PARAMS

- DLR is processed and values are take from that table in the different actions

Before data is imported? yes. And, can we perform any actions before data is loaded? yes. How? Where? Event Scripts! That's the answer. The BefImport event script is executed just before data is imported so it looks like a perfect place where to make our parameter value dynamic.

In case you are not familiar with Event Scripts, you can find them in Script Editor (which is not the best place where to code BTW):

Updating the Parameter Value

In order to facilitate date filtering we decided to have dates as strings with format YYYYMMDD.Why YYYYMMDD? Because it was quite close to the format PERIODKEY was stored (YYYY-MM-DD)

Start Date: as we are extracting YTD, the start date of the range will be always YYYY0101 (1st of Jan) where YYYY is the year of the POV period being processed.

End Date: this will be our POV Period Key which has been configured in the Global Period Mapping Table as the last day of each month.

Therefore, our parameter value should look like: BETWEEN 'YYYY0101' AND 'YYYYMMDD'

It's clear then, that PERIODKEY will be our driver to get the two values we need. This value is stored in the context object fdmContext:

Updating the table with parameter values is not difficult, you just need some SQL notions:

And the good thing? this is totally transparent for the end-user as the parameter value will be updated on the fly based on the period he/she selects in the Point-Of-View bar.

Process Details showing "Importing Data for <NONE>"

If you execute a DLR with period mapping type option set to None, then you see something like this in the Process Details page (not the error! just the <NONE> in the process step):

If you execute a DLR with period mapping type option set to None, then you see something like this in the Process Details page (not the error! just the <NONE> in the process step):

I don't like this message as you don't know the period being processed. How can we change that?

This information is stored in table AIF_PROCESS_DETAILS just after data is imported into FDMEE. Again back to similar question we had before: How? and Where?

How? the process step message takes the value displayed from column ENTITY_NAME. We just need to update this value for current process id. Import step can be identified with value PROCESS_BAL_IMP in column ENTITY_TYPE. Period description is stored in fdmContext["PERIODNAME"].

Where? as the line is inserted after data is imported, event script AftImport seems to be the right place.

The following code can make that happen:

This information is stored in table AIF_PROCESS_DETAILS just after data is imported into FDMEE. Again back to similar question we had before: How? and Where?

How? the process step message takes the value displayed from column ENTITY_NAME. We just need to update this value for current process id. Import step can be identified with value PROCESS_BAL_IMP in column ENTITY_TYPE. Period description is stored in fdmContext["PERIODNAME"].

Where? as the line is inserted after data is imported, event script AftImport seems to be the right place.

The following code can make that happen:

And process details will show now:

Much better now, isn't it?

Conclusions

We showed two different approaches to extract data from multiple source periods (due to YTD requirement in our case).

The approach of defining source period mappings table has an impact on the number of data extracts executed by FDMEE, one for each source period. Besides, it requires defining the table with multiple mapping entries.

On the other hand, by using source filter for date column, FDMEE executes a single import step using a BETWEEN filter on the WHERE clause of the SQL query executed on the source. IMHO, a better solution. But again, it all depends on your requirements.

That's all for today. I hope you found this helpful. As always, I suggest you don't focus on this specific solution and try to take main idea to your specific requirements.

Sometimes, with a bit of creativeness and customization, we can get a nice, maintainable and flexible solution to meet our requirements regardless their complexity.

Enjoy!